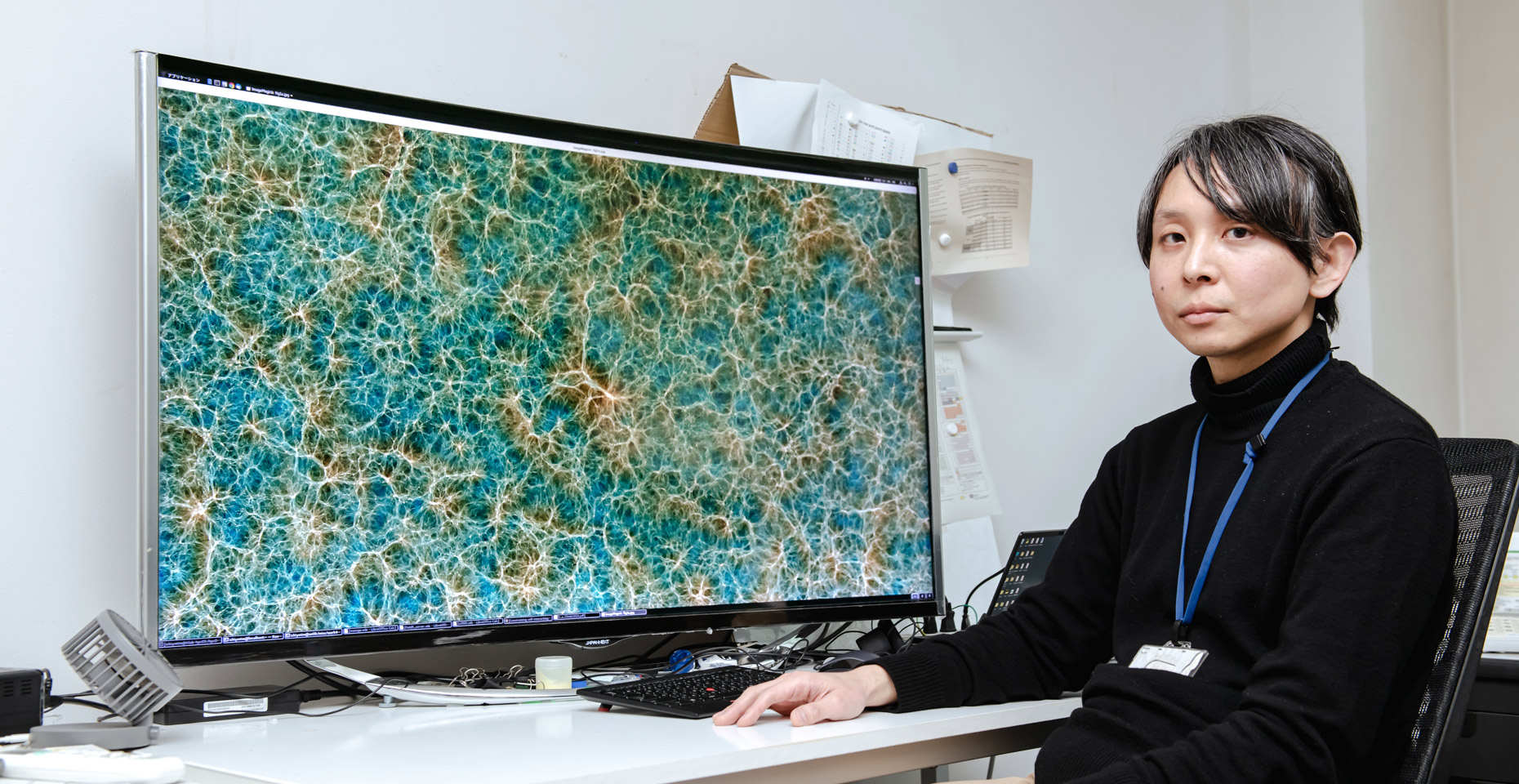

The universe, believed to have originated in the Big Bang 13.8 billion years ago, is a subject of research at Chiba University, where the focus is on unraveling how it evolved from its beginning to the present day. Associate Professor Tomoaki Ishiyama of the Digital Transformation Enhancement Council has achieved the world’s largest simulation of dark matter structure formation and made the data widely available on the internet. The simulation movie has already been viewed 300,000 times, just 18 months after its release. In recognition of these accomplishments, he was honored with the Chiba University Award for Distinguished Researcher in 2022.

We had the opportunity to interview Associate Professor Ishiyama, who continues his research in computer-based reproduction of the universe, about the source of his passion and his life as a researcher.

*Dark Matter: An unidentified form of matter believed to make up the majority of the universe’s material composition, interacting with ordinary matter only through gravity.

An unexpected encounter led him to his current research themes

Did you choose this research theme because of a long-standing interest in space?

I believe that many people decide on their desired field of study and the research laboratory they wish to join during the university entrance examination stage. However, I was not that type of person. When I entered the University of Tokyo, I chose the Department of General Systems Studies, which allowed me to study a wide range of subjects without being limited by specific disciplines. This choice ultimately led me to my current research path.

In the laboratory I belonged to, our focus was on calculations performed by connecting multiple computers. While the scale was much smaller compared to supercomputers, the experience of utilizing hardware developed within our laboratory for accelerating gravitational calculations has proven valuable in my subsequent research endeavors.

Around the same time, there was a significant technological advancement in supercomputers. An environment for high-speed processing of large volumes of data was just beginning to be established. Naturally, this led to a shift towards conducting simulations using supercomputers.

Why is a simulated catalog of the universe necessary?

What are simulations of cosmic evolution and a catalog of the universe?

In my research, I simulate the evolution of the universe over a span of approximately 13.8 billion years, from around 100 million years after its birth (the Big Bang) to the present day. This involves defining a specific region of space, setting the number of particles to be simulated within that region, and calculating how they change over time according to the laws of physics. The initial fluctuations in particle density give rise to the subsequent formation of stars, galaxies, and black holes. If the simulation closely resembles the observed universe, we can infer that the early universe likely existed in a similar state.
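The recipe described here (choose a region, choose a number of particles, advance them under the laws of physics) can be illustrated with a toy gravitational N-body integrator. This is only a minimal sketch under stated assumptions, not the code used in the actual research: the units, the softening length, the particle setup, and all function names are hypothetical. It uses direct summation and ignores cosmic expansion, whereas production cosmological codes rely on much faster methods.

```python
import math
import random

G = 1.0           # gravitational constant in arbitrary code units (assumption)
SOFTENING = 0.05  # hypothetical softening length that avoids divergent forces


def make_particles(n, seed=0):
    """Place n equal-mass particles at rest at random positions in a small box."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(n)]
    vel = [[0.0, 0.0, 0.0] for _ in range(n)]
    mass = [1.0 / n] * n
    return pos, vel, mass


def accelerations(pos, mass):
    """Direct-summation gravitational acceleration on every particle."""
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = sum(c * c for c in d) + SOFTENING ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * mass[j] * d[k] * inv_r3
                acc[j][k] -= G * mass[i] * d[k] * inv_r3
    return acc


def leapfrog_step(pos, vel, mass, dt):
    """Advance all particles in place by one kick-drift-kick leapfrog step."""
    acc = accelerations(pos, mass)
    for i in range(len(pos)):
        for k in range(3):
            vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
            pos[i][k] += dt * vel[i][k]        # drift
    acc = accelerations(pos, mass)
    for i in range(len(pos)):
        for k in range(3):
            vel[i][k] += 0.5 * dt * acc[i][k]  # second half kick


def total_momentum(vel, mass):
    """Total momentum vector; it should stay near zero for this setup."""
    return [sum(m * v[k] for m, v in zip(mass, vel)) for k in range(3)]


if __name__ == "__main__":
    pos, vel, mass = make_particles(32)
    for _ in range(50):
        leapfrog_step(pos, vel, mass, dt=0.01)
    print("total momentum after 50 steps:", total_momentum(vel, mass))
```

Because every pair of particles is visited, the cost of this sketch grows with the square of the particle count, which is one reason real simulations need more efficient algorithms and supercomputers.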

Currently, we are working on cataloging the universe by compiling data observed at various observatories worldwide. This cataloging process involves organizing and summarizing the necessary information, such as the location of astronomical objects. The term “catalog” shares the same etymology as mail-order catalogs. In space science, well-known catalogs include the “planet catalog” and the “debris catalog” (which provides information on the size and location of space debris). Through these efforts, everything existing in outer space is being observed and cataloged. Advancements in space telescopes have enabled the observation of fainter and more distant astronomical objects. As observation technology continues to progress, the newly acquired information will be cataloged as well.

The simulated catalog can serve as a pathway to uncover the hidden truths within the universe

So, why do we conduct simulations and create simulated catalogs? One reason is to evaluate systematic errors in observations. Outer space exhibits variations in the density of molecular gas, the raw material for stars: some regions have high gas density, and others have none at all. Space can be more diverse than one might imagine. Since the observable area is limited, we cannot determine from observations alone which kind of variation the observed region corresponds to. Thus, by performing pseudo-observations using simulated catalogs and comparing them with actual observation results, we can make informed guesses about the corresponding irregularities.
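The idea of pseudo-observations can be sketched with a toy example: build a clustered mock catalog, "observe" many small fields inside it, and see how much the object counts vary from field to field. Everything here is an illustrative assumption (the clustered toy catalog, the cubic field shape, the function names); it is not the procedure used with the real simulated catalogs.

```python
import math
import random
import statistics


def make_mock_catalog(n_clusters, per_cluster, spread, seed=0):
    """Toy clustered catalog: 3D object positions inside a unit box."""
    rng = random.Random(seed)
    points = []
    for _ in range(n_clusters):
        centre = [rng.random() for _ in range(3)]
        for _ in range(per_cluster):
            # Scatter objects around the cluster centre, wrapping into the box.
            points.append(tuple((c + rng.gauss(0.0, spread)) % 1.0 for c in centre))
    return points


def count_in_field(points, corner, size):
    """Number of objects inside a cubic survey field of side `size`."""
    return sum(
        all(corner[k] <= p[k] < corner[k] + size for k in range(3))
        for p in points
    )


def pseudo_observations(points, size, n_fields, seed=1):
    """Object counts in n_fields randomly placed fields of the mock catalog."""
    rng = random.Random(seed)
    counts = []
    for _ in range(n_fields):
        corner = [rng.uniform(0.0, 1.0 - size) for _ in range(3)]
        counts.append(count_in_field(points, corner, size))
    return counts


if __name__ == "__main__":
    catalog = make_mock_catalog(n_clusters=30, per_cluster=100, spread=0.03)
    counts = pseudo_observations(catalog, size=0.2, n_fields=200)
    mean = statistics.mean(counts)
    print("mean count per field:", mean)
    print("field-to-field scatter:", statistics.pstdev(counts))
    print("scatter expected for unclustered objects:", math.sqrt(mean))
```

If the field-to-field scatter is much larger than the value expected for unclustered objects, a single small survey field can be quite unrepresentative, which is the kind of effect a simulated catalog helps to quantify.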

Another reason is to gain insights closer to the truth by examining the discrepancies between theoretical predictions and measured values. Dark matter, although invisible, plays a vital role in shaping the structure of the universe. The study of dark matter evolution and simulations of dark matter halos, massive objects formed through gravity, provide crucial clues in unraveling these cosmic mysteries.

Simulation advances with computers, sharing a fate intertwined with challenges and contemplation

Space simulation using a supercomputer ‘ATERUI II’ at the National Astronomical Observatory of Japan

A familiar example of how supercomputers are used in everyday life is the simulation of COVID-19 droplet and aerosol diffusion using Fugaku. What many may not realize, however, is that even the remarkably precise weather forecasts we see on TV every day would not be possible without the aid of supercomputers.

The study of the ‘Dark Matter Halo,’ which I find intriguing, falls into the same category as weather forecasting, as it involves meticulously calculating the temporal changes in particle movements within a specific area, adhering to the laws of physics.

The major distinction lies in the physical scale involved. The expanse of the target area and the timeline span orders of magnitude beyond comprehension. To handle such vast amounts of data, a multitude of particles is necessary, and the advancement in hardware performance significantly augments the information processing capacity.

The introduction of ‘ATERUI II’ and the transition from the K computer (“Kei”) to “Fugaku” have dramatically increased the volume of data that can be processed in a single calculation. At the same time, fine-tuning the computational programs has become crucial and is itself a subject of study.

The simulation requires diligent effort in contemplating various aspects

The key to maximizing the performance of a supercomputer lies in the design of calculations at the ‘node’ level. Even the proximity of related data in memory to the CPU can influence the speed of computations.

For instance, suppose one computation instruction operates on values in neighboring nodes, but the next instruction must retrieve values from nodes located 100 positions away: the communication time increases by a factor of 100. This may be negligible when it happens only ten or 100 times, but in a simulation such as that of a complex dark matter halo, in which 100 million particles evolve while influencing one another, the computational demands rise significantly. The computational complexity scales with the product of the number of particles and its logarithm (N log N), so the transmission time between nodes has a marked impact on computation speed and electrical power consumption.
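To get a feel for these numbers, the short sketch below compares an N log N cost with a naive all-pairs cost for 100 million particles, and applies the simple "time grows with distance between nodes" picture from the paragraph above. The logarithm base, the neglect of constant factors, the linear hop model, and the function names are all assumptions made for illustration.

```python
import math


def direct_sum_interactions(n):
    """Pairwise force evaluations if every particle pair is computed directly."""
    return n * (n - 1) // 2


def n_log_n_interactions(n):
    """Rough cost of an N log N method (base-2 logarithm, constant factor ignored)."""
    return n * math.log2(n)


def hop_cost(n_messages, hops, time_per_hop):
    """Toy communication model: time grows linearly with the number of hops."""
    return n_messages * hops * time_per_hop


if __name__ == "__main__":
    n = 100_000_000  # 100 million particles, as in the text
    print("all-pairs interactions:", direct_sum_interactions(n))
    print("N log N interactions  :", n_log_n_interactions(n))
    # Same number of messages, sent to a neighbor versus 100 nodes away.
    near = hop_cost(n_messages=n, hops=1, time_per_hop=1.0)
    far = hop_cost(n_messages=n, hops=100, time_per_hop=1.0)
    print("far / near communication time:", far / near)
```

Even with an efficient algorithm, the operation count is large enough that a constant-factor slowdown in communication is paid an enormous number of times, which is why data placement across nodes matters.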

Improving computational efficiency requires meticulous consideration of the literally ‘invisible’ arrangement of nodes, which demands steady and methodical work.

*In the realm of supercomputers, the term “node” refers to a unit of hardware that represents a “knot” or “junction.” Typically, it encompasses a group of CPUs and memory, all operating under a single basic software (OS). A supercomputer comprises multiple nodes interconnected via a high-speed network.

Being able to repeat trial and error with unwavering persistence and endless perseverance

What are the essential factors or actions required to sustain research and achieve meaningful results?

In my lab, I teach students what they need for their research, and no special talent is required. Since it is cutting-edge research, having basic academic skills and fundamental knowledge is more important than extensive preparation.

Furthermore, it is essential to be able to continue calmly, even when things don’t go well, without being disheartened by the absence of reactions or praise. Finding interest and joy in the continuous cycle of thinking things through again and again is important.

During interviews and similar situations, I am often asked about “the qualities required of researchers” or about “fateful encounters with research topics, mentors, and rivals, and experiences of setbacks.” However, I don’t have any particularly dramatic stories to share. When it comes to research, if the expected results are not obtained, I diligently investigate the cause, and if it is successful, I consider further improvements. It is a repetitive cycle. I believe that research is a process of continuous trial and error.

Designing research is an irresistible attraction

What are the captivating aspects of research that you wish to share with young researchers?

As the circumstances surrounding young researchers become increasingly challenging year by year, I feel somewhat uneasy about recommending that young people pursue a career in research. Nevertheless, there is a charm to research that I would like to convey to them: designing your own investigations and embarking on the quest to unravel the mysteries of the universe is an irresistible attraction.

Because our research is carried out on a supercomputer, the daily rhythm may not differ much from that of a typical office worker. The scale of the work varies, ranging from individual research projects to international collaborations.

In my laboratory, fourth-year undergraduate students have the opportunity to engage with supercomputers. A supercomputer serves as a theoretical telescope, granting us a glimpse into the unexplored realms of the universe. In the year 2022, eight students are part of our team, and we also welcome researchers from overseas. I aspire to create an environment where individuals who are eager to embrace challenges can be provided with opportunities.
