Researchers develop a novel topology-aware multiscale feature fusion network to enhance the accuracy and robustness of EEG-based motor imagery decoding

Motor imagery electroencephalography (MI-EEG) is crucial for brain-computer interfaces, serving as a valuable tool for motor function rehabilitation and fundamental neuroscience research. However, decoding MI-EEG signals is extremely challenging, and traditional methods overlook dependencies between spatiotemporal features and spectral-topological features. Now, researchers have developed a new topology-aware method that captures the deep dependencies across different feature domains of EEG signals. The method delivers more accurate and robust decoding, paving the way for more brain-responsive technology.

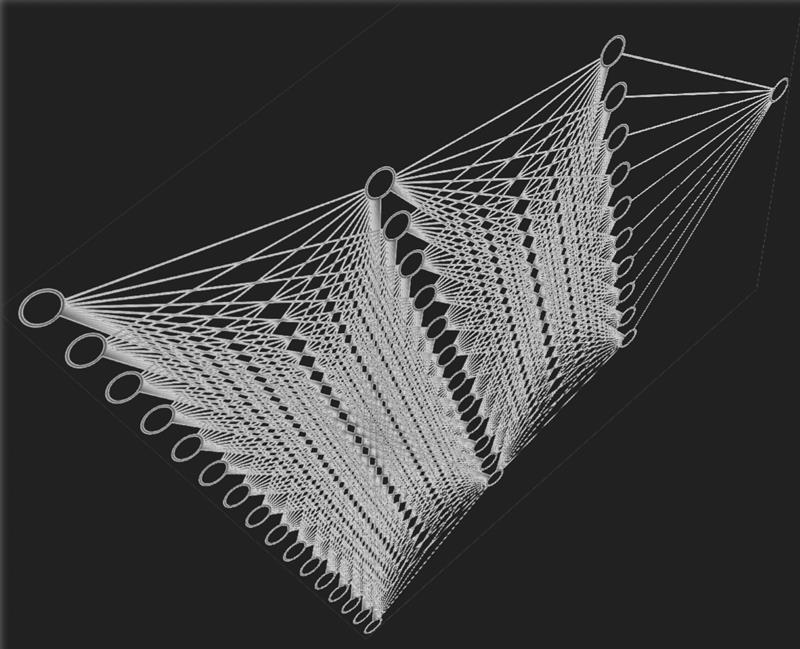

Image title: Topology-Aware Multiscale Feature Fusion Network for EEG-Based Motor Imagery Decoding

Image caption: The proposed topology-aware decoding approach captures the dependencies between different feature domains, ensuring robust and more accurate decoding of electroencephalography (EEG) signals.

Image credit: DancingPhilosopher via Creative Commons Search Repository

Image source: https://openverse.org/image/bf1742e2-7aff-4823-88db-781f98e32031?q=Neural+network&p=7

Image license: CC BY-SA 4.0

Usage restrictions: Credit must be given to the creator. Adaptations must be shared under the same terms.

Electroencephalography (EEG) is a fascinating non-invasive technique that measures and records the brain’s electrical activity. It detects small electrical signals produced when neurons in the brain communicate with each other, using electrodes placed at specific locations on the scalp that correspond to different regions of the brain. EEG has applications in various fields, from cognitive science and neurological disease diagnosis to robotic prosthetics development and brain-computer interfaces (BCI).

Different brain activities produce unique EEG signal patterns. One important example is motor imagery (MI)—a cognitive process in which specific brain regions are activated just by imagining movements, without any actual physical motion. This process generates stable and distinct EEG patterns. MI-EEG is a crucial component of BCI systems, serving as a valuable tool for prosthetic control and neurorehabilitation research. However, decoding MI-EEG signals is extremely challenging due to their low signal-to-noise ratio, high nonlinearity, and variability over time.

Traditionally, researchers have relied on machine learning methods to extract the temporal, spatial, and spectral features (frequency distribution and power variations) of MI-EEG signals for decoding. Recently, deep learning models have shown some promise for decoding MI-EEG signals; however, they still face several challenges in accurately capturing the complex nature of EEG data.

To overcome these challenges, Ph.D. student Chaowen Shen and Professor Akio Namiki, both from the Graduate School of Engineering, Chiba University, Japan, have developed an innovative topology-aware multiscale feature fusion (TA-MFF) network. “Current deep learning models primarily extract spatiotemporal features from EEG signals, overlooking potential dependencies on spectral features,” explains Prof. Namiki. “Moreover, most methods extract only shallow topological features between electrode connections, limiting a deeper understanding of the spatial structure of EEG signals. Our approach introduces three new modules that effectively address these limitations.” Their study was made available online on September 26, 2025, and will be published in Volume 330, Part A of the journal Knowledge-Based Systems on November 25, 2025.

The TA-MFF network comprises a spatiotemporal network (ST-Net) and a spectral network (S-Net). It enhances MI-EEG signal decoding capability through the coordinated operation of three main modules: the spectral-topological data analysis-processing (S-TDA-P) module, the inter-spectral recursive attention (ISRA) module, and the spectral-topological and spatiotemporal feature fusion (SS-FF) unit. Notably, the S-TDA-P and ISRA modules operate in parallel within S-Net.

In S-Net, the Welch method is first used to convert the EEG signals into power spectral density representations, which measure how signal power is distributed across different frequencies. This step helps reduce noise and simplify the data. Then, the S-TDA-P module leverages persistent homology, a powerful computational tool for studying the topology of data, to extract deep spectral-topological relationships between different EEG electrodes and capture persistent patterns within the signals. Meanwhile, the ISRA module models correlations between the different frequency bands, highlighting key spectral features while suppressing redundant information.
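To make the two preprocessing ideas above concrete, here is a minimal, standard-library-only Python sketch. It is an illustration under stated assumptions, not the authors' S-TDA-P implementation: the synthetic four-channel signal, the 128 Hz sampling rate, the correlation-based distance between electrodes, and the restriction to 0-dimensional persistent homology (connected components only) are all choices made here for brevity. All function names are hypothetical.

```python
import cmath
import math
import random


def welch_psd(x, fs, nperseg=64, noverlap=32):
    """Welch estimate: average Hann-windowed periodograms of overlapping segments."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / nperseg) for n in range(nperseg)]
    scale = fs * sum(w * w for w in window)
    n_bins = nperseg // 2 + 1
    starts = range(0, len(x) - nperseg + 1, nperseg - noverlap)
    psd = [0.0] * n_bins
    for s in starts:
        seg = x[s:s + nperseg]
        mean = sum(seg) / nperseg
        seg = [(v - mean) * w for v, w in zip(seg, window)]  # remove mean, then window
        for k in range(n_bins):
            coeff = sum(v * cmath.exp(-2j * math.pi * k * n / nperseg)
                        for n, v in enumerate(seg))
            # One-sided spectrum: double every bin except DC and Nyquist.
            one_sided = 1.0 if k in (0, nperseg // 2) else 2.0
            psd[k] += one_sided * abs(coeff) ** 2 / scale
    freqs = [k * fs / nperseg for k in range(n_bins)]
    return freqs, [p / len(starts) for p in psd]


def correlation_distance(a, b):
    """1 - Pearson correlation between two PSD vectors (assumed electrode distance)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    norm = math.sqrt(sum((u - ma) ** 2 for u in a) * sum((v - mb) ** 2 for v in b))
    return 1.0 - cov / norm


def h0_persistence(dist):
    """Death thresholds of connected components as the distance threshold grows.

    Every electrode starts as its own component (born at 0); each merge of two
    components ends the life of one of them at the merging distance.
    """
    n = len(dist)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    edges = sorted((dist[i][j], i, j) for i in range(n) for j in range(i + 1, n))
    deaths = []
    for d, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            deaths.append(d)
    return deaths


def synthetic_channel(freq_hz, fs, n_samples, rng):
    """Hypothetical stand-in for one EEG electrode: a noisy sinusoidal rhythm."""
    return [math.sin(2 * math.pi * freq_hz * t / fs) + rng.gauss(0.0, 0.5)
            for t in range(n_samples)]


rng = random.Random(0)
fs = 128                      # assumed sampling rate in Hz
rhythms = [10, 10, 20, 20]    # two electrodes per dominant rhythm
psds = []
for f in rhythms:
    freqs, psd = welch_psd(synthetic_channel(f, fs, 256, rng), fs)
    psds.append(psd)

dist = [[correlation_distance(a, b) for b in psds] for a in psds]
deaths = h0_persistence(dist)
peak_hz = freqs[psds[0].index(max(psds[0]))]
print(peak_hz, [round(d, 3) for d in deaths])
```

In this toy setup, electrodes sharing a rhythm merge at a small threshold, while the two groups only merge at a much larger one; that long-lived gap is the kind of "persistent pattern" the paragraph refers to. In practice, the hand-written estimator would normally be replaced by a library routine such as `scipy.signal.welch`, and higher-dimensional homology would require a dedicated topological data analysis library.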

Finally, the SS-FF unit integrates topological, spectral, and spatiotemporal features across the network. Unlike traditional approaches, where features from different domains are simply concatenated, it utilizes a two-step fusion strategy, first merging topological and spectral features and then integrating them with spatiotemporal features. This approach captures deep dependencies across feature domains.
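The difference between single-step concatenation and a two-step fusion order can be sketched as follows. The source does not describe the operators used inside the SS-FF unit, so everything here is an assumption for illustration: each fusion step is modelled as "concatenate, then pass through a small dense layer with ReLU", the weights are random rather than learned, and the feature vectors and layer sizes are invented.

```python
import math
import random


def dense(x, weights, bias):
    """Fully connected layer with ReLU: one output per weight row."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def init_dense(n_in, n_out, rng):
    """Hypothetical random initialisation; a real model would learn these values."""
    limit = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def two_step_fusion(topo, spectral, spatiotemporal, stage1, stage2):
    # Step 1: merge topological and spectral features into one joint vector.
    spectral_topological = dense(topo + spectral, *stage1)
    # Step 2: integrate that joint vector with the spatiotemporal features.
    return dense(spectral_topological + spatiotemporal, *stage2)


rng = random.Random(0)
topo = [0.2, 0.9, 0.1]                      # invented topological summaries
spectral = [0.5, 0.4, 0.3, 0.1]             # invented band-power features
spatiotemporal = [0.7, 0.2, 0.6, 0.8, 0.3]  # invented ST-Net output

stage1 = init_dense(len(topo) + len(spectral), 4, rng)
stage2 = init_dense(4 + len(spatiotemporal), 6, rng)
fused = two_step_fusion(topo, spectral, spatiotemporal, stage1, stage2)

flat = topo + spectral + spatiotemporal     # single-step concatenation baseline
print(len(fused), len(flat))
```

The point of the staged design in this sketch is that the topological and spectral features are mixed with each other before they ever meet the spatiotemporal features, whereas the flat baseline simply places all three side by side and leaves any interaction to later layers.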

As a result of these innovative techniques, the TA-MFF network achieves excellent classification performance on MI-EEG decoding tasks, outperforming state-of-the-art methods.

“Our approach holds strong potential for robust and efficient EEG-based MI decoding,” remarks Prof. Namiki. “I was interested in understanding how the brain controls movement and how this knowledge could be utilized to help people who are immobile. This research could help people control computers, wheelchairs, or robotic arms just by thinking, helping those with movement difficulties to live more independently.”

Overall, this innovative technique represents a major step toward more accurate and robust MI-EEG decoding, which could help everyday technology respond more naturally to our brain signals, including those associated with thoughts and emotions.


About Professor Akio Namiki from Chiba University, Japan

Dr. Akio Namiki is currently a Professor at the Department of Mechanical Engineering, Graduate School of Engineering, Chiba University, Japan. He obtained his Master’s and Ph.D. from the University of Tokyo in 1996 and 1999, respectively. At Chiba University, he also leads the Namiki Laboratory, which focuses on the development of advanced computer vision systems. His research work has received over 3,300 citations to date. His research interests include high-speed vision systems, sensor fusion, and robotic manipulators, among others.

Funding:

This work was supported by the JST SPRING program (Grant Number JPMJSP2109).

Reference:

Title of original paper: A topology-aware multiscale feature fusion network for EEG-based motor imagery decoding

Authors: Chaowen Shen and Akio Namiki

Affiliations: Graduate School of Engineering, Chiba University, Japan

Journal: Knowledge-Based Systems

DOI: 10.1016/j.knosys.2025.114540

Contact: Akio Namiki

Graduate School of Engineering, Chiba University, Japan

Email: namiki@faculty.chiba-u.jp

Academic Research & Innovation Management Organization (IMO), Chiba University

Address: 1-33 Yayoi, Inage, Chiba 263-8522, Japan

Email: cn-info@chiba-u.jp
